Visualize the data points as weights on one side of a balancing scale. Your task is to find the right combination of weights (coefficients) for the objects on the other side so that the scale balances as closely as possible. Linear regression adjusts these coefficients until the scale is as near to equilibrium as it can get, which means the difference between the two sides (the prediction error) is minimized. The weights' values and positions correspond to the coefficients of the linear equation, and once the scale is balanced, you can use this equation to make predictions for new data points.

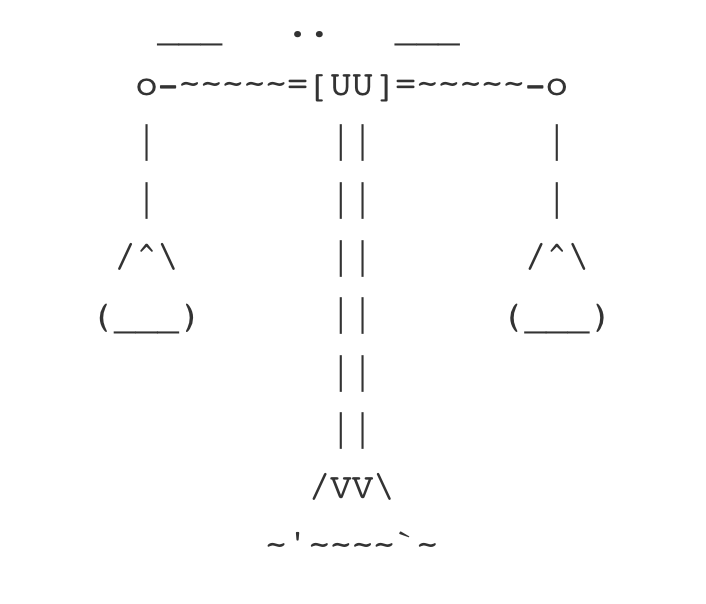
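To make the balancing idea concrete, here is a minimal sketch in plain Python. It assumes a single input feature and fits `y ≈ w * x + b` by gradient descent on the mean squared error. The data, the function names `fit_line` and `predict`, the learning rate, and the step count are all made up for illustration; this is one common way to do the adjusting, not the only one.

```python
def fit_line(xs, ys, lr=0.01, steps=5000):
    """Fit y ≈ w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # start with an unbalanced scale
    n = len(xs)
    for _ in range(steps):
        # Residuals: how far each prediction is from the observed value.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        # Nudge the coefficients in the direction that reduces the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def predict(x, w, b):
    """Use the fitted line to estimate y for a new x."""
    return w * x + b


# Hypothetical data lying close to y = 2x + 1.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.1, 4.9, 7.2, 9.0, 10.8]

w, b = fit_line(xs, ys)
print(f"w={w:.2f}, b={b:.2f}, prediction at x=6: {predict(6.0, w, b):.2f}")
```

Each pass through the loop is one small adjustment of the weights. The scale never balances perfectly on noisy data, so the loop settles on the coefficients that leave the smallest average squared imbalance.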

Unravel, don’t tangle.