The context window (context length) of a LLM is the length of the longest sequence of tokens that a LLM can use to generate a token. If a LLM is to generate a token over a sequence longer than the context window, it would have to either truncate the sequence down to the context window, or use certain algorithmic modifications.

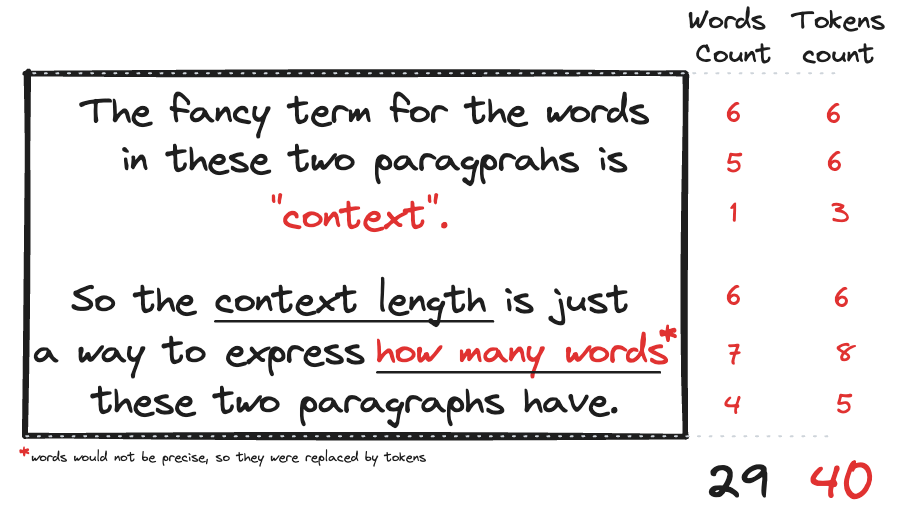

Ok, so the fancy term „context length“ is nothing else than a token count. And token count is nothing else than words count. Not quite. This is what the internet has to say about that:

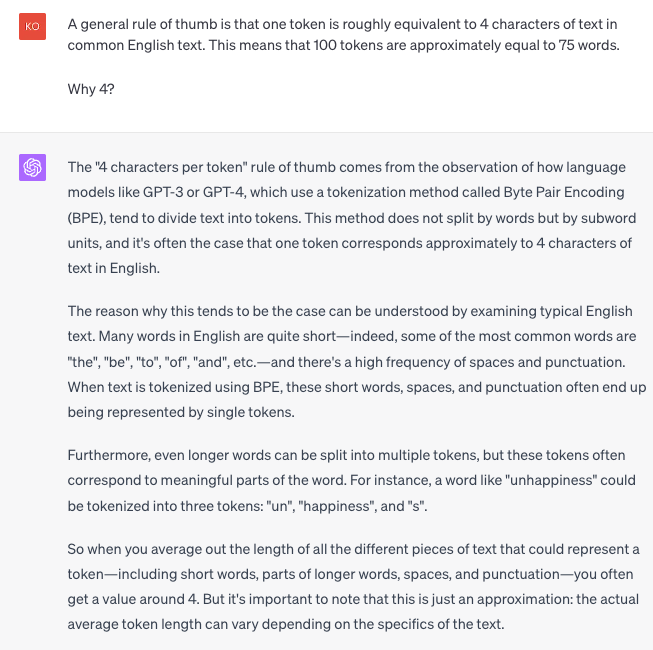

A general rule of thumb is that one token is roughly equivalent to 4 characters of text in common English text. This means that 100 tokens are approximately equal to 75 words.

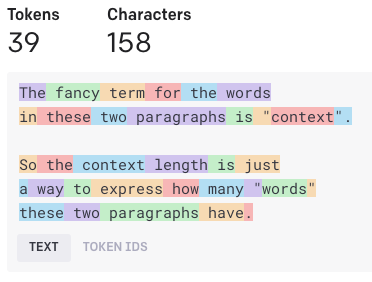

So if we take our two sentences and throw them into the OpenAI Tokenizer, this is what we get:

So is the internet correct about 4 characters approximation? Let’s ask chatGPT4:

Not quite. The approximation can actually confuse rather than clarify. So rather than using the approximation of 4, just remember that short words, spaces, and punctuations often end up being represented by single tokens.

Unravel, don’t tangle